How does the work of Cycorp and Dr. Lenat fit with AI? Elaborate on the history.

ChatGPT said:

Cycorp and Dr. Douglas Lenat’s work occupy a distinctive place in the history of AI, particularly within symbolic AI and knowledge representation. Their central effort, the Cyc project, is one of the most ambitious attempts to build a system capable of common-sense reasoning, which remains a major challenge in AI today.

Background: Who was Dr. Douglas Lenat?

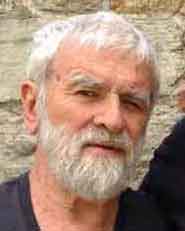

Dr. Douglas Lenat (1950–2023) was a computer scientist and entrepreneur best known for:

- His early work on AM (Automated Mathematician), the subject of his 1976 Stanford PhD thesis: a heuristic-driven program that rediscovered mathematical concepts such as prime numbers.

- Founding Cycorp in 1994 to commercialize and continue the development of the Cyc project.

- Advocating for the importance of explicit, human-curated knowledge bases in AI.

He was a pioneer of symbolic AI, which contrasts with modern statistical AI (like neural networks and deep learning).

The Cyc Project: Common Sense AI

Goal: Build a comprehensive ontology and knowledge base of common-sense knowledge, encoded in a logical formalism, so that a computer can make the everyday inferences people take for granted.

Key Features:

- Started in 1984 at MCC (Microelectronics and Computer Technology Corporation), then moved to Cycorp.

- Contains millions of facts and rules about the world: e.g., “You can’t be in two places at the same time” or “When people are hungry, they try to eat.”

- Uses a formal language called CycL, based on first-order predicate calculus with higher-order extensions, to represent knowledge.

- Enables inference, explanation, and deductive reasoning over symbolic knowledge.
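To make the facts-plus-rules idea concrete, here is a minimal Python sketch. It is not CycL and not Cyc’s inference engine: facts are plain tuples, each rule has one premise over one argument, and the predicate names (hungry, triesToEat, looksForFood) are hypothetical. It shows forward chaining plus a recorded justification for every derived fact, the kind of traceable explanation a symbolic system can offer.

```python
from dataclasses import dataclass

# A fact is a predicate name followed by its arguments, e.g. ("hungry", "Alice").
Fact = tuple[str, ...]


@dataclass(frozen=True)
class Rule:
    """Hypothetical one-premise rule: if premise(x) holds, assert conclusion(x)."""
    name: str
    premise: str
    conclusion: str


def forward_chain(facts: set[Fact], rules: list[Rule]) -> dict[Fact, str]:
    """Apply rules until nothing new is derived.

    Returns every known fact mapped to a justification, so each conclusion
    can be traced back to the rule and the fact that produced it.
    """
    why: dict[Fact, str] = {fact: "asserted" for fact in facts}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            for fact in list(why):  # snapshot, because we add to `why` inside the loop
                if fact[0] == rule.premise and len(fact) == 2:
                    derived = (rule.conclusion, fact[1])
                    if derived not in why:
                        why[derived] = f"{rule.name} applied to {fact}"
                        changed = True
    return why


if __name__ == "__main__":
    kb = forward_chain(
        {("hungry", "Alice")},
        [
            Rule("HungerRule", "hungry", "triesToEat"),        # "When people are hungry, they try to eat."
            Rule("EatingRule", "triesToEat", "looksForFood"),  # hypothetical follow-on rule
        ],
    )
    for fact, reason in kb.items():
        print(fact, "<-", reason)
```

Because every derived fact carries its justification, asking "why does the system believe Alice is looking for food?" is just a dictionary lookup followed back through the chain.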

Ambition:

While today’s LLMs like GPT-4 use statistical patterns learned from huge datasets, Cyc was designed to simulate understanding by encoding facts and relationships explicitly.

Historical Role in AI

1980s–1990s:

- Symbolic AI was dominant. Researchers believed the path to general AI was to explicitly teach systems everything they need to know.

- Lenat believed that true AI must know what every person already knows, which meant feeding it massive amounts of human knowledge manually.

- This approach was criticized for being too slow and unscalable, but praised for its theoretical rigor.

2000s–2010s:

- Symbolic approaches like Cyc fell out of favor as machine learning and statistical methods surged in popularity.

- Cyc remained a niche but important project, used in defense, healthcare, and some government applications.

2020s:

- With the rise of LLMs (e.g., GPT, BERT), there has been renewed interest in hybrid models — combining symbolic and statistical AI.

- Proponents, Lenat among them, argue that Cyc’s knowledge base could help ground and check LLM outputs, especially where logic and causality matter.

- Lenat remained active in promoting this hybrid vision until his passing in 2023.

Cyc vs. Modern AI: A Comparison

| Feature | Cyc (Symbolic AI) | Modern LLMs (Statistical AI) |
|---|---|---|
| Knowledge Source | Manually curated facts and rules | Learned from massive text corpora |
| Reasoning | Deductive, logical inference | Pattern recognition, probabilistic |
| Transparency | Highly explainable | Often a “black box” |
| Strengths | Logic, common sense, consistency | Scale, fluency, generalization |
| Weaknesses | Scaling, brittleness, development cost | Inaccuracy, hallucination, lack of logic |

Legacy and Future

Dr. Lenat and Cycorp’s contributions:

- Emphasized the critical role of explicit knowledge in achieving general intelligence.

- Helped define the knowledge engineering paradigm of AI.

- Provided a foundation for future neuro-symbolic AI, where systems like LLMs are paired with logical reasoning engines.

Cycorp still exists, and the Cyc knowledge base is being positioned for hybrid AI applications, where it could offer the explicit grounding that deep learning systems lack.

Would you like a visual timeline or a summary of how Cyc could be combined with today’s AI (e.g., GPT or other LLMs)?

You said:

yes

ChatGPT said:

Great! Here are both a timeline of Cyc and Dr. Lenat’s contributions and a summary of how Cyc can be combined with modern AI (LLMs) in hybrid systems.

Timeline: Cyc & Symbolic AI History

1976: AM (Automated Mathematician)

- Lenat develops a system that "discovers" mathematical concepts.

- Marks his shift toward building machines that simulate human-level reasoning.

1984: Launch of the Cyc Project

- Lenat starts Cyc at MCC in Austin, Texas.

- Goal: Build a comprehensive ontology of common-sense knowledge.

1994: Cycorp is founded

- Cyc is spun out into a private company (Cycorp, Inc.) to continue the project and find real-world applications.

1990s–2000s: Knowledge Engineering Era

- Cyc team manually encodes millions of facts and rules (e.g., "Birds can fly", "People usually eat 3 times per day").

- Used in expert systems, defense, and intelligence.
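A rule like “Birds can fly” only works as a default, since penguins are birds that cannot fly. Cyc supports default assertions that more specific knowledge can override. The toy Python sketch below does not reproduce Cyc’s mechanism; it assumes a simple “most specific default wins” policy over a hypothetical kind hierarchy (PARENT and CAN_FLY_DEFAULT are illustrative names).

```python
from typing import Optional

# Hypothetical kind hierarchy: each kind maps to its more general parent kind.
PARENT = {"Sparrow": "Bird", "Penguin": "Bird", "Bird": "Animal"}

# Default "can fly" values; an entry on a more specific kind overrides its parent's.
CAN_FLY_DEFAULT = {"Bird": True, "Penguin": False}


def can_fly(kind: str) -> Optional[bool]:
    """Return the most specific default for `kind`, or None if none applies."""
    current: Optional[str] = kind
    while current is not None:
        if current in CAN_FLY_DEFAULT:
            return CAN_FLY_DEFAULT[current]
        current = PARENT.get(current)  # climb one level toward more general kinds
    return None


if __name__ == "__main__":
    for kind in ("Sparrow", "Penguin", "Animal"):
        print(kind, can_fly(kind))
```

Sparrow inherits the Bird default (True), Penguin’s own entry overrides it (False), and Animal gets None because no default is stated at or above it.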

2010s: Rise of Machine Learning

- Statistical approaches like deep learning surpass symbolic methods in many benchmarks.

- Cyc continues under the radar with government and enterprise use.

2020s: Neuro-Symbolic Renaissance

- Researchers seek to combine the best of both worlds.

- Cyc’s structured knowledge and logical reasoning are seen as a complement to the statistical power of LLMs.

2023: Dr. Douglas Lenat passes away

- Renewed reflection on his contributions to AI and the future of hybrid approaches.

2024+: New interest in Cyc as a grounding tool for LLMs

- Integration with large language models to provide logic, causality, and explanation.

How Cyc & Symbolic AI Can Enhance LLMs

Hybrid AI: Symbolic + Statistical = Neuro-Symbolic

Modern LLMs (like GPT-4 or Claude) are brilliant at language generation, but often struggle with:

- Consistency

- Causality

- Deep logical inference

- Understanding context over time

Cyc can help with that. Here’s how:

| Integration Point | What Cyc Adds | How LLMs Benefit |
|---|---|---|
| Common-Sense Reasoning | Deep structured knowledge about everyday facts | Can reduce hallucinations, improves judgment |
| Symbolic Inference Engine | Logic-based reasoning over facts and rules | Enables multi-step reasoning beyond pattern matching |
| Ontology & Semantic Graphs | Millions of relations in formal logic (CycL) | Provides structure, definitions, disambiguation |
| Grounding Language | Connects words to real-world concepts with defined properties | Improves accuracy and factuality |
| Explainability | Transparent chain of reasoning | Makes AI systems more trustworthy and auditable |
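As a rough illustration of the “Ontology & Semantic Graphs” row, the Python sketch below borrows the name of CycL’s genls relation (“this collection is a more specific kind of that one”), but the collections, the SENSES word table, and the disambiguate helper are all hypothetical. It shows how a type hierarchy can pick the right sense of an ambiguous word once the context says what type of thing is needed.

```python
from collections import deque

# Hypothetical genls-style edges: collection -> its more general collections.
GENLS: dict[str, list[str]] = {
    "JaguarAnimal": ["BigCat"],
    "BigCat": ["Mammal"],
    "Mammal": ["Animal"],
    "JaguarCar": ["Automobile"],
    "Automobile": ["Vehicle"],
}

# Hypothetical lexicon: a surface word and the concepts it might denote.
SENSES: dict[str, list[str]] = {"jaguar": ["JaguarAnimal", "JaguarCar"]}


def generalizations(collection: str) -> set[str]:
    """The collection itself plus everything it transitively generalizes to."""
    seen = {collection}
    queue = deque([collection])
    while queue:  # breadth-first walk up the genls edges
        for parent in GENLS.get(queue.popleft(), []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def disambiguate(word: str, required_type: str) -> list[str]:
    """Keep only the senses of `word` that fall under `required_type`."""
    return [s for s in SENSES.get(word, []) if required_type in generalizations(s)]


if __name__ == "__main__":
    print(disambiguate("jaguar", "Animal"))   # context: "the jaguar was hunting"
    print(disambiguate("jaguar", "Vehicle"))  # context: "the jaguar needs new tires"
```

In a real hybrid system the required type would come from parsing the surrounding text; here it is passed in by hand to keep the sketch small.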

Example: Combining Cyc with GPT

Prompt to LLM: “Can a cat be in two places at once?”

- An LLM will probably answer “No” based on patterns in its training data, but it might also say “Yes, in quantum physics” if the prompt or its training data leans on speculative or science-fiction framing.

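- With Cyc integration, the system can consult a rule such as the one below (simplified and illustrative; a faithful version would also require the two places to be disjoint and both locations to hold at the same time):

- Rule: (#$not (#$and (#$spatiallyLocated ?CAT ?PLACE1) (#$spatiallyLocated ?CAT ?PLACE2)))

- Conclusion: “No, unless you’re discussing quantum phenomena in a metaphorical or speculative context.”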

Result: More accurate, context-aware, and explainable response.
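The cat rule can be checked mechanically. This Python sketch is a toy under stated assumptions, not Cyc: location claims are (object, place, time) records, two places count as compatible only if one contains the other via a hypothetical PART_OF table, and any pair of claims that puts the same object in incompatible places at the same time is reported. Containment is modeled so that a cat in the kitchen is not flagged for also being in the house.

```python
from itertools import combinations
from typing import NamedTuple


class Located(NamedTuple):
    """One claim: `obj` is in `place` at discrete time step `time`."""
    obj: str
    place: str
    time: int


# Hypothetical containment facts: each place maps to the place that contains it.
PART_OF = {"Kitchen": "House", "Garden": "Yard"}


def within(inner: str, outer: str) -> bool:
    """True if `inner` is `outer` or is (transitively) contained in it."""
    current = inner
    while current is not None:
        if current == outer:
            return True
        current = PART_OF.get(current)
    return False


def violations(claims: list[Located]) -> list[tuple[Located, Located]]:
    """Pairs of claims that put one object in two unrelated places at once.

    Simplification: places are compatible only when one contains the other;
    partially overlapping regions are not modeled.
    """
    return [
        (a, b)
        for a, b in combinations(claims, 2)
        if a.obj == b.obj
        and a.time == b.time
        and not (within(a.place, b.place) or within(b.place, a.place))
    ]


if __name__ == "__main__":
    claims = [
        Located("Felix", "Kitchen", 1),
        Located("Felix", "House", 1),   # fine: the kitchen is inside the house
        Located("Felix", "Garden", 1),  # conflicts with both claims above
        Located("Felix", "Garden", 2),  # fine: a different time step
    ]
    for a, b in violations(claims):
        print(f"Contradiction: {a.place} vs {b.place} at t={a.time}")
```

Each reported pair doubles as an explanation: it names exactly which two claims the rule says cannot both be true.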

Real-World Use Cases for a Cyc + LLM System

- Medical diagnostics: LLM suggests hypotheses; Cyc validates consistency with known causal rules.

- Legal reasoning: Cyc helps enforce logical structure in multi-step arguments.

- Military/intelligence: Combine raw data parsing (LLMs) with policy logic (Cyc).

- Education: Tutoring systems that reason through math or science with step-by-step logic.
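The medical-diagnostics pattern (an LLM proposes, a rule base checks) can be sketched as a propose-then-verify loop. Everything here is hypothetical: fake_llm stands in for a real model call, and the condition and finding names are placeholders, not medical knowledge. The point is the division of labor: the statistical component generates candidates, and the symbolic component rejects those that contradict known findings and says why.

```python
# Hypothetical causal rules: each condition maps to findings it would produce.
# Placeholder names only; these are NOT real medical facts.
EXPECTED_FINDINGS: dict[str, set[str]] = {
    "ConditionA": {"Fever", "Rash"},
    "ConditionB": {"Fever"},
    "ConditionC": {"Cough"},
}


def fake_llm(symptoms: set[str]) -> list[str]:
    """Stand-in for an LLM call; it ignores its input and returns fixed guesses."""
    return ["ConditionA", "ConditionB", "ConditionC", "ConditionD"]


def verify(hypotheses: list[str], present: set[str], absent: set[str]) -> dict[str, str]:
    """Check each hypothesis against the rule table and explain the verdict."""
    verdicts: dict[str, str] = {}
    for hypothesis in hypotheses:
        expected = EXPECTED_FINDINGS.get(hypothesis)
        if expected is None:
            verdicts[hypothesis] = "unknown to the knowledge base"
        elif (conflict := expected & absent):
            # The hypothesis predicts a finding that was explicitly ruled out.
            verdicts[hypothesis] = f"rejected: predicts {sorted(conflict)}, which was ruled out"
        elif expected & present:
            verdicts[hypothesis] = "consistent"
        else:
            verdicts[hypothesis] = "not supported by any observed finding"
    return verdicts


if __name__ == "__main__":
    observed = {"Fever"}
    ruled_out = {"Rash", "Cough"}
    for name, verdict in verify(fake_llm(observed), observed, ruled_out).items():
        print(f"{name}: {verdict}")
```

Keeping the verifier separate from the generator means the rule table can be audited and updated without retraining anything, which is the main argument for the hybrid design.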

Would you like a visual flowchart or architecture diagram of how an LLM + Cyc hybrid system might work?